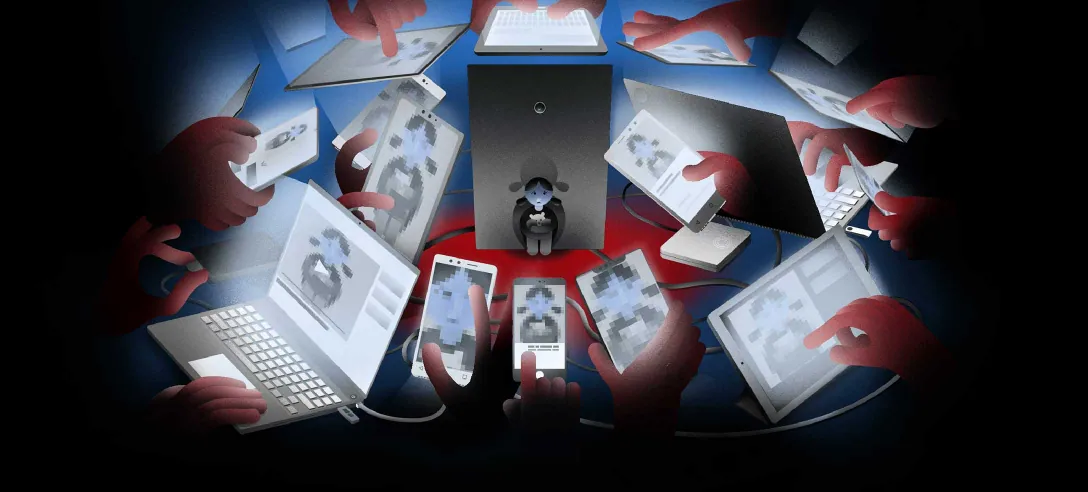

At FactorDaily, we strive to bring attention to how technology impacts society, whether for good or bad. Along with its illuminating side, our reporting has always shown the dark side of technology, which extends to digital misuse. Our current project throws a spotlight on the easy and extensive availability of Child Sexual Abuse Material (CSAM) online.

Online CSAM is a massive problem facing the world today. The NCMEC CyberTipline received 16.9 million reports, which included 69.1 million CSAM files, from Electronic Service Providers across the globe. Close to 2 million of these reports are from India, making us the biggest contributor and consumer of online CSAM.

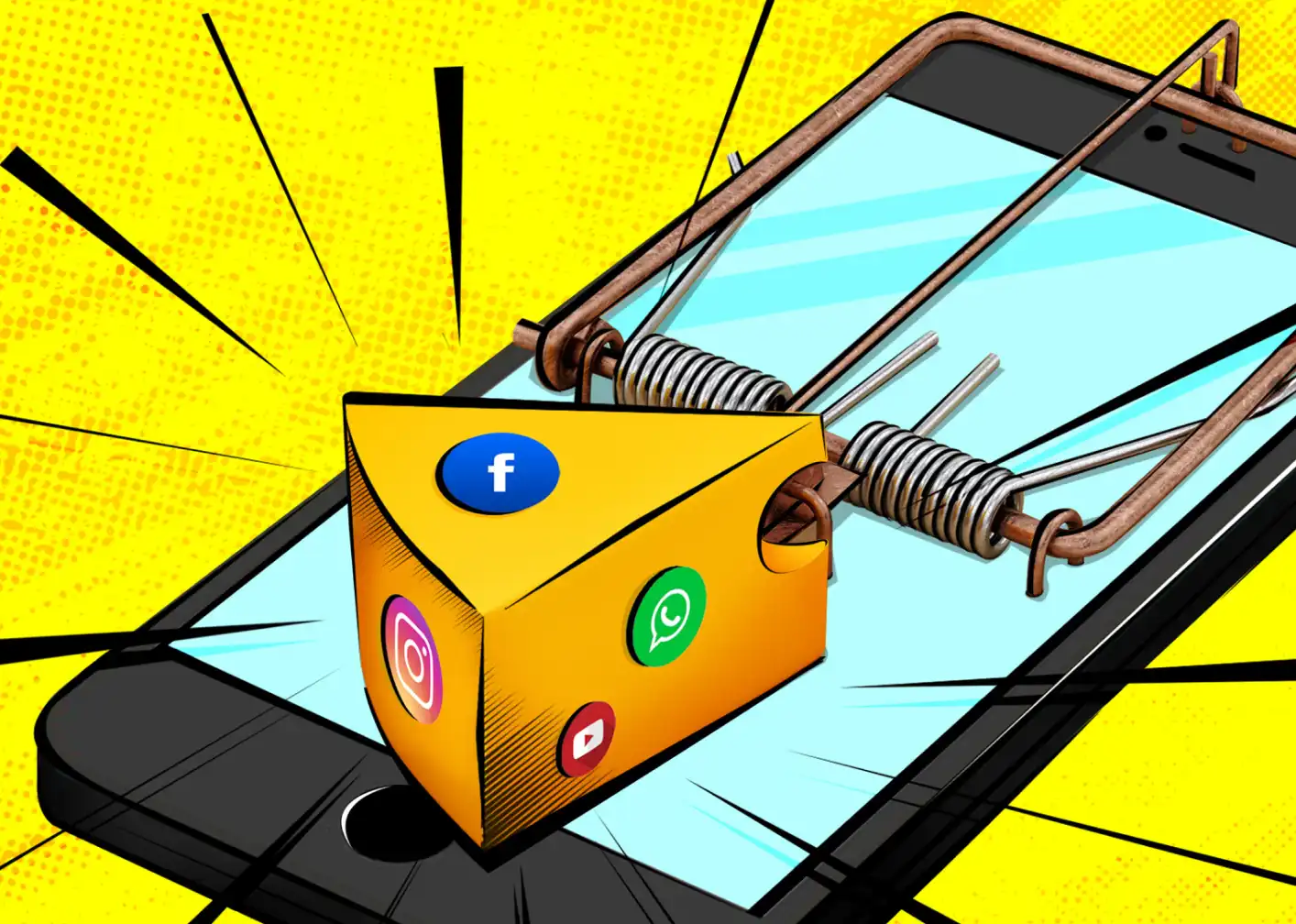

CSAM is available everywhere: in online chat rooms, in video games and, most dangerously, on the ubiquitous OTT platforms that children are exposed to on a daily basis. This implies that over 53 crore children, the Indian population below 18, are at risk of sexual abuse online. We desperately need to develop a good understanding of the problem and find a solution. India has 500 million smartphones in use and is expected to have 639 million active internet users by the end of 2020. The number and popularity of OTT platforms in India are increasing every year. Yet we lack awareness of safe digital behaviour. Besides CSAM, this project will also encompass our reporting on other issues pertaining to digital misuse, which could include non-consensual intimate imagery, bullying and other forms of cybercrime.