It is a mid-August morning in 2019. Chris is in his office in Cambridge, 90 km north of London, when he receives a report from a young woman in India.

In the report, the woman writes that a video of hers was leaked onto the internet without her consent. She also shares a URL. When Chris clicks on it, a sexually explicit video of two teenagers – a boy and a girl – pops up.

“And that she was a minor at the time in which the video was produced. She was unsure of how the video was leaked online or who did it, but, obviously, had concerns that it was circulating,” says Chris.

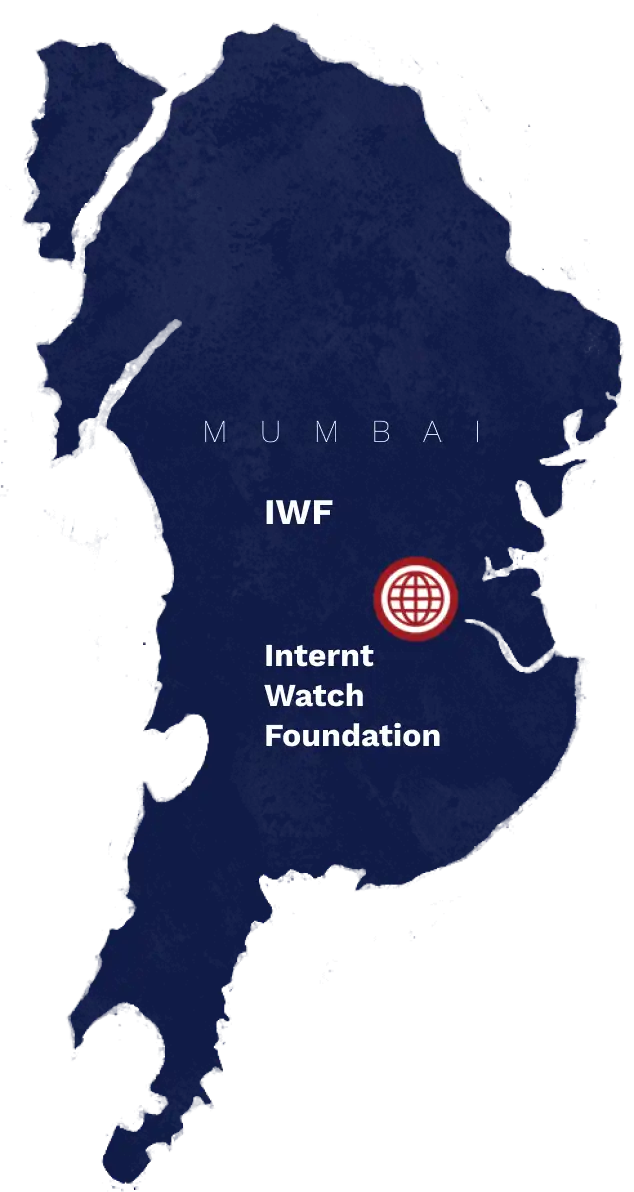

Chris and his team of 13 analysts deal with such imagery every day. He is a Hotline Manager at the Internet Watch Foundation (IWF), which aims to eliminate child sexual abuse material (CSAM) online. The hotline accepts reports of CSAM, verifies them, and acts to have them taken off the internet.

The IWF Hotline in India is run in partnership with Aarambh, a Mumbai-based NGO. When the hotline began operations in September 2016, it was the first of its kind in India. It remained the only one available to Indians to report CSAM till the government launched a national cybercrime reporting portal in January.

IWF is an independent, self-regulatory organisation. It was set up in 1996 by the internet industry to provide an internet hotline for the public and IT professionals to report potentially criminal online content within its remit and to be the ‘notice and takedown’ body for this content. It works in partnership with the internet industry, police, governments, the education sector and charities across the world.

IWF is funded by the EU and member companies from the online industry, including internet service providers (ISPs), mobile operators, content providers, hosting providers, filtering companies, search providers, trade associations and the financial sector.

“In 2019, IWF analysts removed 132,700 webpages showing the sexual abuse of children”

Before taking action, Chris and his team need to verify two things.

First, they check if the video is sexually explicit.

“So, well, there was no doubt about the content of the video,” says Chris.

Next, they have to verify that it is CSAM: that the girl and/or boy featured in the video are children. This is an important prerequisite; only then can Chris act to get the video removed. Going solely by the video, his team is unable to reliably determine their ages.

“If you’re dealing with a three year old or a five year old, verification is not required; it’s obvious,” explains Chris. “But when you’re dealing with late teens, age verification is a crucial part of the jigsaw to enable us to get content removed without being challenged.”

By August 2019, when the woman reports the video, she is 25. But her report mentions that the video was filmed when she was below 18. Any sexually explicit imagery featuring a child is CSAM.

Child sexual abuse (CSA) is a criminal offence in most countries. However, the definition of CSA varies between countries, and so does the age of consent to sexual behaviour. In India, an adult’s sexual contact of any kind with a child (a person below the age of 18) is considered CSA. It also includes the sexual depiction of a child in electronic form, as well as filming, publishing and even watching such imagery. CSA is illegal in India under various laws. Filming adult sexual activity or distributing it comes under various laws in different countries, including the IPC and the IT Act in India. But most countries and websites have zero tolerance for CSAM, making its takedown easier, at least in theory.

The work of IWF and Chris deals specifically with content that involves children. So, on the same day, Chris’s team contacted the woman, asking for additional information to help verify her age.

“Without age verification, we wouldn’t have been able to confirm that this content involved minors. Other agencies that we’d pass the report for content removal would have struggled with age assessment.”

– Chris

For Naina, this isn’t the first time that this video has found its way on to the internet. Or the first time she is seeking to have it removed.

She first learnt from an acquaintance in mid-2019 that a video of hers was circulating on pornographic websites. She registered a complaint at the police station closest to her home in Rajasthan. But the video remained online, compelling her to file a First Information Report (FIR).

FactorDaily has reviewed a copy of the FIR dated June 30, 2019. In it, she writes: “an acquaintance informed me that someone has posted an MMS on porn websites on the internet in my name and in several other names. When I checked it, I found a personal video of mine, which seems to have been filmed when I was still in school; when I was still a minor.”

She states that the video was manipulated and edited and then uploaded on the internet. “This was done without my knowledge and without my consent… The said video is uploaded on the internet by some (unknown) person; I don’t know about it. And even today this video is being shared by several people.”

She notes her worries about circulation of the video:

“If the video remains live on the internet, my whole life will be ruined and my family’s reputation will be tarnished. And my entire family would be affected by this. This will also ruin my personal life.”

– Naina

In the FIR, she seeks that the video be removed from the internet immediately. She also attaches a list of websites on which this video was available. She also urges the authorities to prosecute those who have uploaded the video.

In the entire process, she asks that her name not be disclosed, “to protect my and my family’s reputation”.

The FIR failed to achieve what Naina sought: the video saw a second outbreak in August 2019, when it turned up on additional websites. Naina and Vicky**, the boy who featured in the video, emailed the websites a copy of the FIR, asking them to remove the video. But it didn’t work. This is when Naina reported it on the IWF Hotline. Still, the video remained online.

Then, Vicky called Aarambh India, IWF’s portal partner in Mumbai. Siddharth Pillai, who manages the online safety work at Aarambh, took over the case.


Meanwhile, Siddharth also received an email from Naina complaining that her request to IWF was not processed. Siddharth flagged the video to IWF. He also checked with IWF on the status of Naina’s report. On August 19, Chris got back, mentioning the issue with age verification.

The day after Chris asked for an age verification document, he receives a copy of the FIR from Naina. But, his team can’t verify its authenticity since they can’t read it. It is in Hindi.

“We consulted with the victim if they would mind if we forwarded the document for verification to our Indian portal partners (Aarambh). The victim agreed to this,” says Chris.

Now, Naina and Vicky inform Siddharth about the existence of the FIR. “This was the first time I came to know that they had earlier filed an FIR and that it was in Hindi. This is what they had emailed to individual websites with takedown requests and probably this is why no website acted on it.”

The next day, Siddharth sends an English translation of the FIR to Chris. It establishes that Naina was born in 1994 (she was 25 when she reported the video). The FIR shows that the police had filed her complaint under Section 67B of the IT (Amendment) Act 2008, which deals with publishing or transmitting material depicting children in sexually explicit acts in electronic form. This implies that the victims, Naina and Vicky, were children when the video was shot. So, the video is CSAM.

Now, Chris springs into action: he has to have the video removed from the internet. For that, he first needs to find every URL where the video is present. The report from Naina contains one URL. But what if it’s available on other websites, he thinks.

The analysts who investigated the reported URL found that the video was accompanied by some tags or keywords. The keywords are bait for the viewer.

They often carry descriptions of the video, names (usually pseudonyms) of the people featured in the video, or the city they are in, in an offer of proximity or titillation. Similarly, most adult websites offer the viewer a thumbnail (a photo) of the video’s content. Chris is aware that the same video posted on different websites often uses the same keywords and thumbnail.

The search begins.

Several analysts in his team proactively search for it. “Analysts,” insists Chris. “Plural.”


Meanwhile, in Mumbai, Siddharth has independently taken up searching for the video. Most websites, when they receive complaints from victims that content is CSAM and/or was uploaded without the consent of the people featured in it, take it off.

This is what Siddharth is targeting, in a process parallel to what Chris is doing. With an English translation of the FIR, this might work, he thinks.

He is using the same technique of keyword search and reverse image search using the thumbnail.


Chris is right. A lot of websites are using the same thumbnail for this video. His team’s search around keywords locates additional instances of the video.

“We traced the content to a number of different locations and certainly on at least 10 separate websites, and the content was actually hosted in four different countries: the UK, the US, Hungary and the Netherlands.”

– Chris

“The websites that we found the content on were what most people would classify as legal adult websites,” Chris says. “It’s possible for people to upload content of a minor on even legitimate legal websites, where the website potentially doesn’t know the age of the individuals. And therefore someone has abused the ability to be able to upload content onto a site. In this particular instance, these were relatively mainstream adult websites that the content was posted on.” And, this is why verifying the age of the victims was so crucial to him.

Some of these websites use only the photo (the thumbnail) while others use both the photo and the original video. Chris often sees manipulated versions of an original video circulating on several websites. “But in this particular instance, I don’t believe there was any additional manipulation of the video. It was just a straightforward copy. Or very, very similar.”

Meanwhile, Siddharth’s search is also yielding results. He has received some impassioned help in locating this video from the victims themselves. “Every day, they would wake up and look for the websites where the video had popped up recently; they were randomly searching for them on the open internet. The boy was sending me a bunch of URLs every day,” says Siddharth.

They come across a dozen URLs that contain this video. Some of the copies have been manipulated: they are edited, chopped or cropped, and some have a changed aspect ratio, says Siddharth.

The viral video is on several porn sites, from swankily designed popular ones to jerry-built obscure ones. Some of them are source websites where the video is uploaded. Many others are gateway websites: they just embed the thumbnail and redirect to the video using the URL of the source website. These are often shoddily put together and, sometimes, don’t even have a proper mechanism to report criminal content, Siddharth says.

“Since several versions of the video, manipulated and modified, have popped up on the internet, it is hard to determine which is the original material and when and where it was uploaded first,” he adds.


Now, Siddharth emails individual websites with takedown requests; he attaches a translation of the victim’s statement as recorded in the FIR.

The video is also on some sites owned by MindGeek, a Montreal-based private company that runs several pornographic websites and adult film production companies. MindGeek is a member of the Association of Sites Advocating Child Protection and uses the “Restricted To Adults (RTA)” label to identify its pornographic websites.

But, Siddharth says, some accounts that posted this video are verified by MindGeek. A communications manager at MindGeek confirmed that websites under the MindGeek umbrella that are adult are all marked with the RTA label.

“When the content is posted from a verified account, it is always more difficult to take down. This is what we faced.”

– Siddharth

FactorDaily reached out to three adult websites operated by MindGeek.

The New York Times reported in 2020 that Pornhub has a huge problem with CSAM. Pornhub has discovered 118 instances of CSAM on its platform over three years, a Pornhub spokesperson wrote in an email.

“Over 11.6 million instances were discovered on Facebook in three months. 244,188 individual users were suspended from Twitter in six months. By no means am I defending 118 instances; one is too many. But this context really helps to paint the picture of where the true issue with CSAM on the internet is.”

He wrote: “Our content moderation goes above and beyond the recently announced, internationally recognized Voluntary Principles to Counter Online Child Sexual Exploitation and Abuse. The platform utilizes a variety of automated detection technologies such as:

- CSAI Match: YouTube’s proprietary technology for combating Child Sexual Abuse Imagery content online

- Content Safety API: Google’s cutting-edge artificial intelligence tool that helps detect illegal imagery

- PhotoDNA: Microsoft’s technology that aids in finding and removing known images of child exploitation

- Vobile: a state-of-the-art fingerprinting software that scans any new uploads for potential matches to unauthorized materials to protect against any banned video being re-uploaded to the platform”

Pornhub hasn’t answered questions specific to Naina’s video or about the number of CSAM uploads and their consumption from India. The other two platforms are yet to respond.

For Siddharth, dealing with MindGeek websites turns out to be the easiest. “These sites have a content reporting and removal system in place. It is quicker and simpler to contact them.” With less prominent websites, even reaching out is tough since they don’t offer a portal for complaints. For these, Siddharth writes to the webmaster and complains about the content.


Now that Chris’s team has the list of websites the video is on, their objective is to have it removed from each of them. They proceed to issue notices to each of the different host countries.

“The content was hosted in four different countries. So we had to produce different reports and disseminate that information to four different locations around the world. So you can quickly see just from one report, the true international nature of it. Dealing with reports of this nature requires a lot of coordination and cooperation with different agencies around the world,”

– Chris

The video hosted in the UK is taken down within hours since the IWF can issue Notice and Takedown (NTD) requests directly to websites hosted in the UK. Takedown in other countries takes several more days.

In Mumbai, Siddharth has started noticing that the video is being taken down on a few websites, including ones he had written to. Some copies were taken down even before the IWF took action, he says.

“We seem to have been able to take down all but two links. These two are on obscure websites that are now seemingly non-functional,” says Siddharth. “I couldn’t get any correspondence from the websites themselves. I think they have abandoned the practice of reverting to every report which is something they used to do.”

Since the IWF issued takedown notices, Siddharth is seeing less and less of the video on the internet.

The boy has started sending fewer and fewer URLs to him. And, then, by August 23, 2019, he stops altogether.

Still, Chris’s team keeps looking for the video on the internet, just in case. Till mid-September 2019, over a period of 26 days since Naina first reported the video, they keep coming across more instances, apart from the initial 10 websites that they linked directly to Naina’s report. They create more reports and pursue removal in each case.

“I can confirm that all the reported content was removed and the majority (60%) was removed within five days,”

– Chris

Naina and Vicky, two teenage high-school students, film a video.

Naina finds her video on porn websites.

Naina makes a police complaint to take the video off the internet.

Naina files an FIR after the police complaint sees no action.

Naina and Vicky email video platforms to remove their video.

The video is viral.

Vicky calls Aarambh to help have the video removed.

Vicky emails Aarambh a list of websites that have the video.

Naina emails Aarambh that her reports to IWF aren’t going through. Aarambh flags the case to IWF.

IWF Day 1

Naina reports to IWF hotline a website that has her video.

IWF verifies its sexual content but is unable to verify that Naina and Vicky are minors in the video.

IWF asks Naina for documents to verify her age.

IWF Day 2

IWF receives a copy of FIR from Naina but is unable to verify it since it’s not in English.

IWF asks Aarambh to help verify the document to prove Naina’s age.

Aarambh sends an English translation of FIR to IWF

IWF verifies Naina was a minor when the video was filmed.

Vicky emails Aarambh a list of five websites that haven’t taken down the video on request.

Aarambh has succeeded in taking down content from all but two websites that Vicky reported.

60% of content reported by IWF is removed

Vicky stops sending Aarambh more URLs

Over 26 days, IWF proactively searches and finds more websites, creates reports and issues notices

All the reported content has been removed

So, right now, the internet seems clear of the video. Siddharth hasn’t received another complaint from Naina or Vicky. And Chris’s team hasn’t come across another website that is using the video. While this is reassuring, it hardly means the danger is over for Naina and Vicky.

Once something has made its way onto the internet, the difficult truth is that it’s almost impossible to put the genie back in the bottle, says Chris. “Sometimes people are lucky that something appears online very briefly and no one had an opportunity to copy the image and to spread it, or to download it and then use it at a later date. But more often, once something’s posted online, other people will make a copy. There’s no 100% fail-safe to stop.”

Won’t a hash in Chris’s database stop that from happening?

Even if an image has been hashed, there are no guarantees that it will not appear online again, says Chris. Some people use ways to get around that, he explains, without giving details for fear others might emulate them. “It (hashing) is a useful tool. It’s part of the arms race against the proliferation of content online, but it’s not a magic bullet in that sense.”


“Even if an image has been hashed, there are no guarantees that it will not appear online again”

– Chris